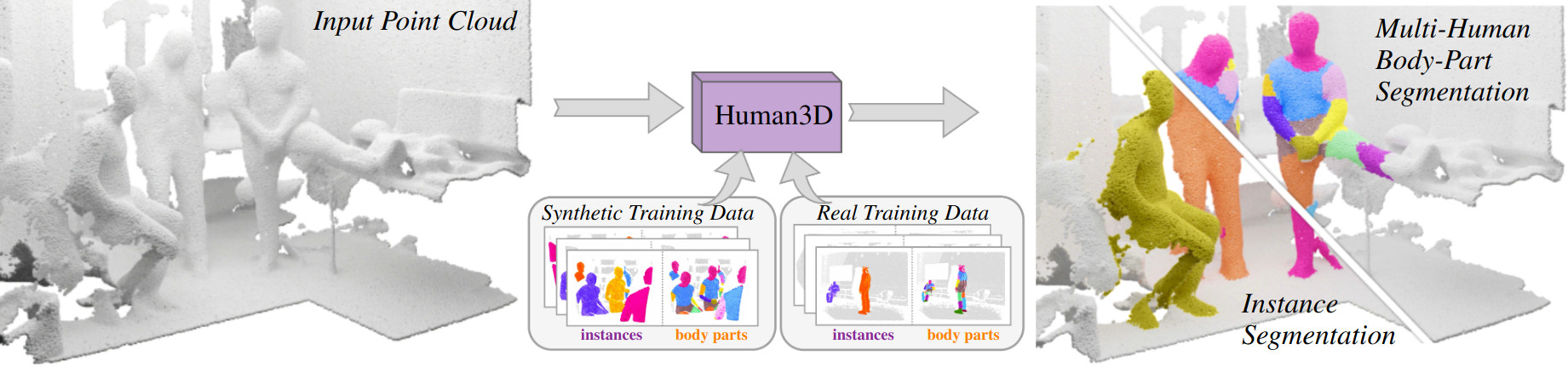

TL;DR: We propose Human3D 🧑‍🤝‍🧑, the first multi-human body-part segmentation model that operates directly on 3D scenes. In an extensive analysis, we validate the benefits of training on synthetic data across multiple baselines and tasks.

Segmenting humans in 3D indoor scenes has become increasingly important with the rise of human-centered robotics and AR/VR applications. To this end, we propose the task of joint 3D human semantic segmentation, instance segmentation and multi-human body-part segmentation. Few works have attempted to directly segment humans in cluttered 3D scenes, which is largely due to the lack of annotated training data of humans interacting with 3D scenes. We address this challenge and propose a framework for generating training data of synthetic humans interacting with real 3D scenes. Furthermore, we propose a novel transformer-based model, Human3D, which is the first end-to-end model for segmenting multiple human instances and their body parts in a unified manner. The key advantage of our synthetic data generation framework is its ability to generate diverse and realistic human-scene interactions, with highly accurate ground truth. Our experiments show that pre-training on synthetic data improves performance on a wide variety of 3D human segmentation tasks. Finally, we demonstrate that Human3D outperforms even task-specific state-of-the-art 3D segmentation methods.
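To make the joint task concrete, the following minimal sketch shows how the three outputs (human semantic mask, per-human instance masks, and per-human body parts) relate to each other on a toy point cloud. The label conventions (0 for background, positive integers for humans and body parts), the function name `derive_task_outputs`, and the toy data are assumptions for illustration only; they are not the label format or code used by Human3D.

```python
import numpy as np

# Hypothetical per-point labels for a toy scene of 8 points.
# Assumed convention: 0 = background, k > 0 = k-th human instance.
instance_ids = np.array([0, 1, 1, 1, 2, 2, 0, 2])
# Assumed convention: 0 = background, p > 0 = index into some body-part list.
part_ids = np.array([0, 1, 2, 2, 1, 3, 0, 3])


def derive_task_outputs(instance_ids, part_ids):
    """Derive the three task outputs from per-point instance and part labels."""
    # Semantic segmentation: is each point human or background?
    semantic = instance_ids > 0
    # Instance segmentation: one boolean mask per human.
    human_ids = np.unique(instance_ids[semantic])
    instance_masks = {int(k): instance_ids == k for k in human_ids}
    # Multi-human body-part segmentation: parts observed for each human.
    parts_per_human = {
        int(k): np.unique(part_ids[instance_ids == k]).tolist() for k in human_ids
    }
    return semantic, instance_masks, parts_per_human


semantic, instance_masks, parts_per_human = derive_task_outputs(instance_ids, part_ids)
```

In this toy layout every point carries both an instance label and a part label, so the semantic and instance outputs can be read off the same per-point annotation as the body parts.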

Remarkably, our approach generalizes to out-of-distribution examples. Although trained on synthetic data and real Kinect depth data, Human3D shows promising results on reconstructed point clouds scanned with an iPhone LiDAR sensor.

Human3D produces smooth and robust predictions on videos recorded with the Kinect depth sensor.

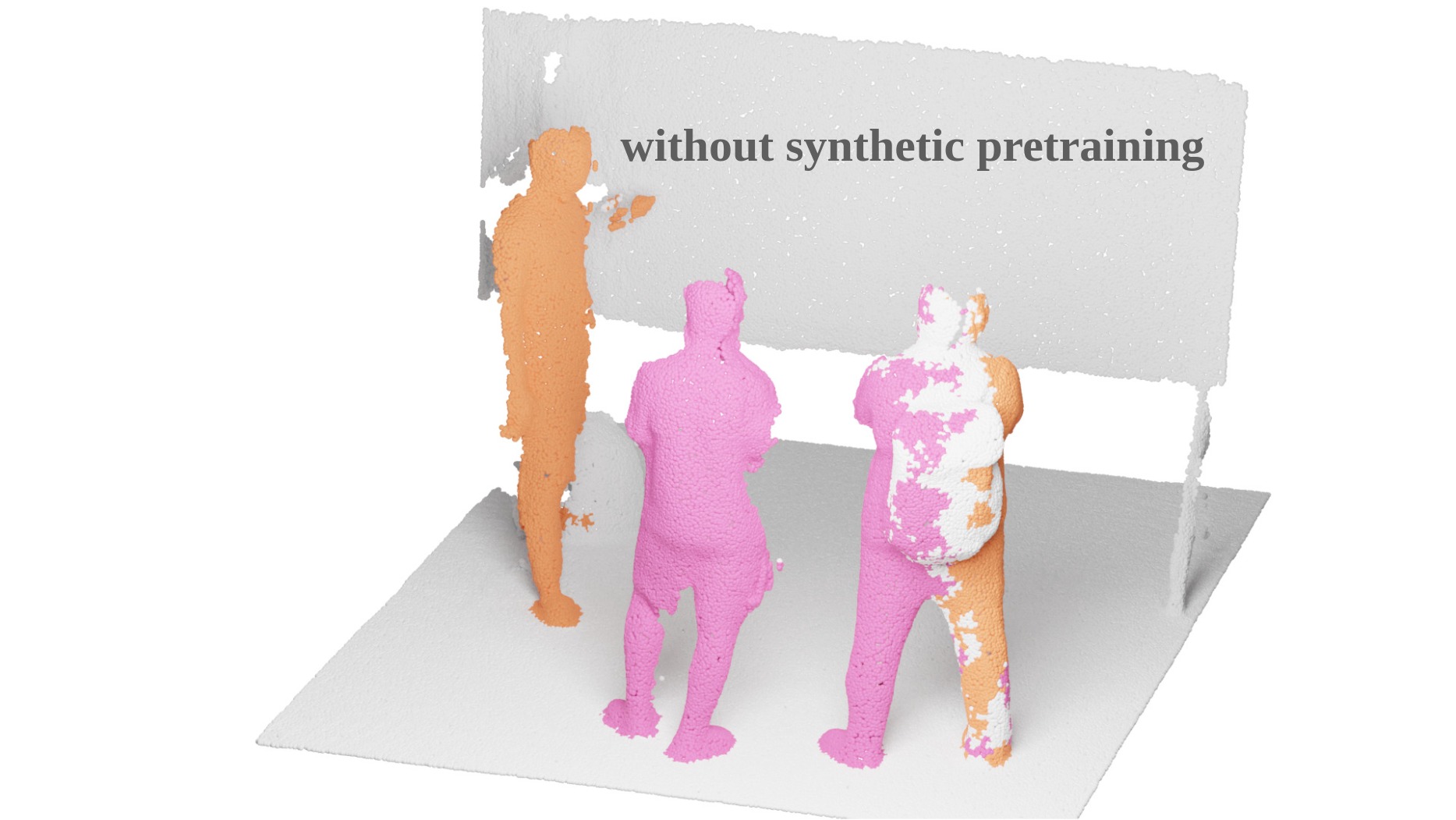

Only EgoBody data:

We observe that models trained only on EgoBody data do not generalize to scenes with more than two people. Here, the instance masks of two people leak into the mask of the third person on the right. The reason is that the EgoBody dataset only contains scenes with fewer than three people at the same time. When trained only on EgoBody, Human3D inevitably learns this bias and consequently fails on scenes with more than two people.

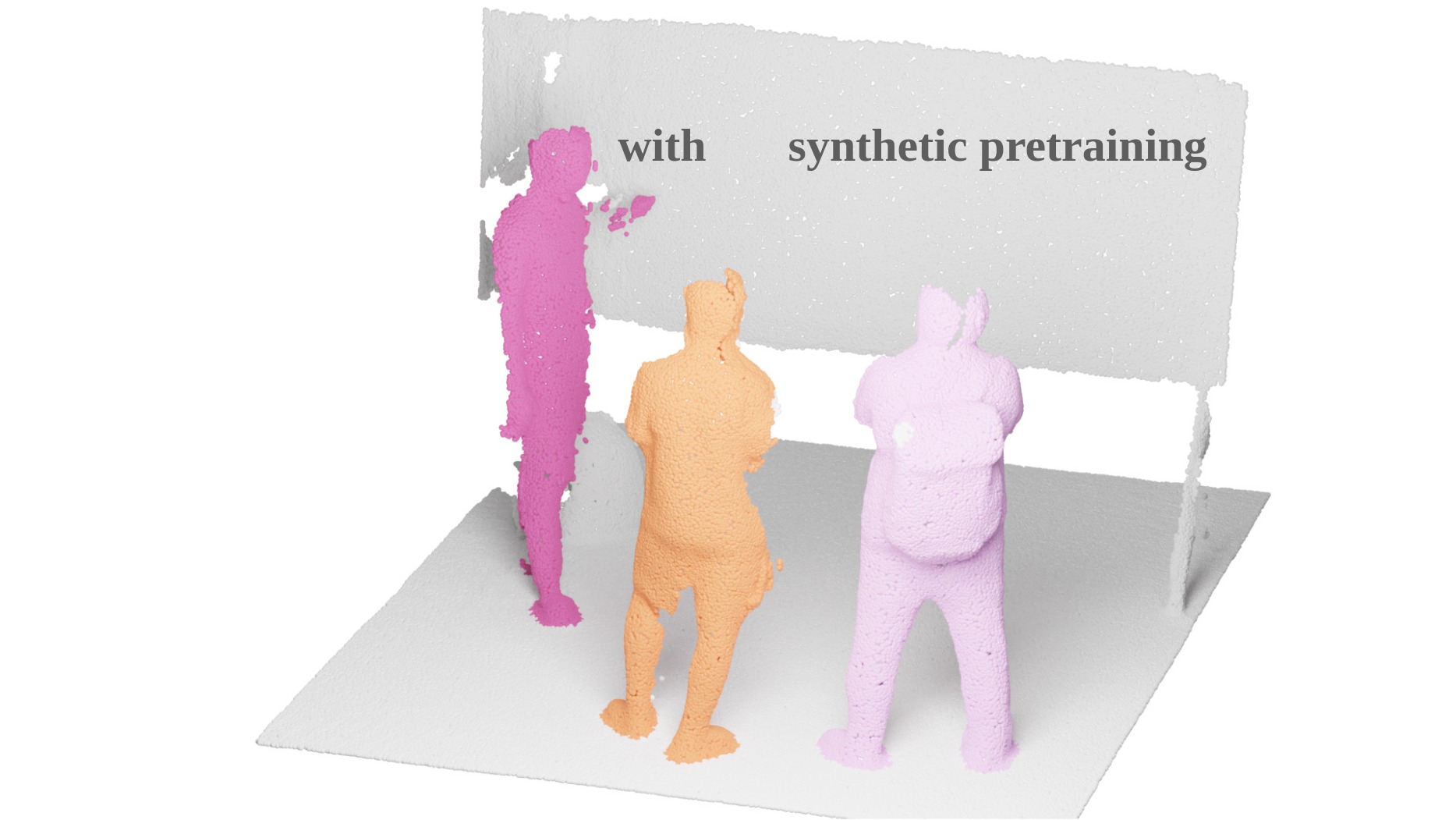

Pretrained with synthetic data:

In contrast, our synthetic dataset consists of scenes with up to 10 people. Human3D, pre-trained on synthetic data and fine-tuned on real EgoBody data, shows significantly better results for scenes with a larger number of people.

We conclude that pre-training with synthetic data helps to segment humans in 3D point clouds!

@inproceedings{human3d,
  title     = {{3D Segmentation of Humans in Point Clouds with Synthetic Data}},
  author    = {Takmaz, Ay\c{c}a and Schult, Jonas and Kaftan, Irem and Ak\c{c}ay, Mertcan and Leibe, Bastian and Sumner, Robert and Engelmann, Francis and Tang, Siyu},
  booktitle = {{Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)}},
  year      = {2023}
}